Privacy Concerns in AI Development

Privacy is one of the most significant challenges in the ongoing development of artificial intelligence. As AI systems become more capable of collecting, analyzing, and processing vast amounts of data, the risk to individuals’ privacy grows. The concern extends beyond protecting personally identifiable information to the ethical obligations that technology developers have toward their users. Understanding the nuances of privacy challenges in AI development is essential for establishing trust, ensuring compliance, and promoting responsible innovation.

Personal Data Collection in AI Systems

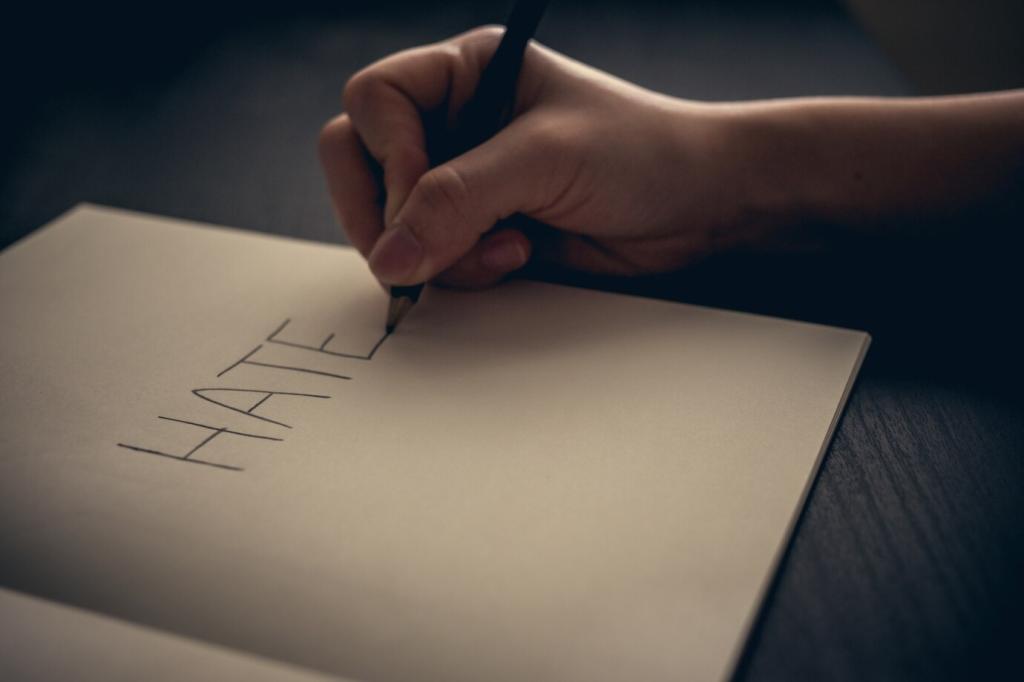

Many AI models depend on detailed datasets scraped from sources such as social media, public records, and commercial transactions. The individuals whose data ends up in these datasets are often unaware of how their information is being used. Unlike traditional data collection, AI-driven collection can aggregate information from seemingly unrelated sources, creating comprehensive profiles that go far beyond what users might reasonably have expected. This aggregation can reveal intimate details about individuals, raising significant ethical and legal questions about the right to privacy in the digital age.
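To make the aggregation risk concrete, here is a minimal Python sketch of how two unrelated datasets can be joined on shared attributes. Everything in it is an assumption made for illustration: the records are invented, and the names `SOCIAL_POSTS`, `PURCHASES`, and `link_profiles` are hypothetical rather than part of any real system.

```python
# Hypothetical, made-up records from two unrelated sources.
SOCIAL_POSTS = [
    {"handle": "@river_runner", "city": "Austin", "birth_year": 1988, "interest": "marathons"},
    {"handle": "@quiet_reader", "city": "Denver", "birth_year": 1992, "interest": "poetry"},
]
PURCHASES = [
    {"loyalty_id": "L-104", "city": "Austin", "birth_year": 1988, "item": "knee brace"},
    {"loyalty_id": "L-221", "city": "Boston", "birth_year": 1975, "item": "garden hose"},
]


def link_profiles(left, right, keys):
    """Join two record lists on shared attributes (a simple record linkage)."""
    # Index the right-hand records by the values of the shared attributes.
    index = {}
    for row in right:
        index.setdefault(tuple(row[k] for k in keys), []).append(row)

    # Merge every left-hand record with each right-hand record that matches.
    profiles = []
    for row in left:
        for match in index.get(tuple(row[k] for k in keys), []):
            profiles.append({**row, **match})
    return profiles


profiles = link_profiles(SOCIAL_POSTS, PURCHASES, ("city", "birth_year"))
for profile in profiles:
    print(profile)
```

Neither source names a person or mentions health, yet the merged profile ties a social media handle to a purchase that hints at an injury. Real linkage would use more attributes and fuzzier matching, but the principle is the same.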

Securing meaningful consent for data collection is a considerable challenge in AI development. Consent forms are often lengthy, filled with jargon, and presented as a prerequisite for service, effectively compelling users to agree without fully understanding the extent of data sharing. This lack of transparency erodes trust and can result in users unknowingly handing over sensitive information. AI systems that continue to learn and adapt may also use data far beyond the scope originally agreed upon, complicating the principle of consent still further.

AI’s ability to monitor behavior and infer personal traits enables new forms of surveillance and profiling. Systems like facial recognition or behavioral tracking can monitor movements or predict preferences, often without the informed consent of those being observed. This level of scrutiny can chill free expression and personal autonomy, especially when combined with state or corporate surveillance initiatives. The resulting power asymmetry between data gatherers and the subjects of data collection raises profound questions about the boundaries of privacy in modern society.

Data Security and Breach Potential

Vulnerabilities in AI Infrastructure

AI-driven platforms rely on complex infrastructure, which can include cloud services, third-party APIs, and interconnected databases. Each of these components may introduce security vulnerabilities, ranging from misconfigured servers to unpatched software flaws. Sophisticated hackers may exploit these weaknesses to gain unauthorized access to data, putting sensitive personal information at risk. Ensuring robust security across the entire AI pipeline requires a multi-layered approach that addresses both technical and human factors in system design and maintenance.

Consequences of Data Breaches

When AI systems experience a data breach, the consequences can be severe and far-reaching. Unlike breaches of traditional databases, breaches involving AI systems often expose highly granular and contextual information about individuals, which makes them even more damaging. The fallout from such incidents may include identity theft, financial loss, and long-term erosion of consumer trust. Organizations may also face legal penalties and heightened regulatory scrutiny, which underscores the importance of proactive data protection measures throughout AI development.

Challenges in Data Anonymization

A common strategy for protecting privacy in AI is data anonymization: removing or obfuscating identifying details before analysis. However, AI algorithms are increasingly capable of re-identifying individuals by analyzing patterns in supposedly anonymized datasets. Truly de-identifying data is technically complex, and even small oversights can render these efforts ineffective. This ongoing “cat-and-mouse” dynamic highlights the need for more advanced privacy-preserving techniques in AI and more diligent stewardship of personal data.
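One way to see why removing names is not enough is to measure k-anonymity: the size of the smallest group of records that share the same combination of indirect identifiers (quasi-identifiers). The Python sketch below is illustrative only. The records are invented, the choice of quasi-identifiers is an assumption, and `k_anonymity`, `unique_records`, and `generalize` are hypothetical helper names.

```python
from collections import Counter

# Hypothetical "anonymized" records: names removed, quasi-identifiers kept.
RECORDS = [
    {"zip": "02138", "birth_year": 1975, "sex": "F", "diagnosis": "asthma"},
    {"zip": "02138", "birth_year": 1975, "sex": "F", "diagnosis": "diabetes"},
    {"zip": "02139", "birth_year": 1982, "sex": "M", "diagnosis": "flu"},
    {"zip": "02141", "birth_year": 1990, "sex": "F", "diagnosis": "migraine"},
]

# Assumed quasi-identifiers: fields that are not names but can narrow down a person.
QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")


def group_sizes(records, keys):
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(r[k] for k in keys) for r in records)


def k_anonymity(records, keys):
    """Return the size of the smallest group; k == 1 means someone is unique."""
    sizes = group_sizes(records, keys)
    return min(sizes.values()) if sizes else 0


def unique_records(records, keys):
    """Return the records whose quasi-identifier combination appears only once."""
    sizes = group_sizes(records, keys)
    return [r for r in records if sizes[tuple(r[k] for k in keys)] == 1]


def generalize(record):
    """Coarsen a record: keep a 3-digit ZIP prefix and round birth year to the decade."""
    out = dict(record)
    out["zip"] = record["zip"][:3] + "**"
    out["birth_year"] = record["birth_year"] // 10 * 10
    return out


print("k before generalization:", k_anonymity(RECORDS, QUASI_IDENTIFIERS))
print("unique records:", len(unique_records(RECORDS, QUASI_IDENTIFIERS)))

generalized = [generalize(r) for r in RECORDS]
print("k after generalization:", k_anonymity(generalized, QUASI_IDENTIFIERS))
```

In this toy dataset, two of the four records are unique on ZIP code, birth year, and sex, so anyone holding an outside dataset with those fields could single them out. Coarsening the ZIP code and birth year still leaves k at 1 here, which illustrates how easily a de-identification step can fall short.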
